Microsoft, OpenAI Warn of Nation-State Hackers Weaponizing AI for Cyber Attacks

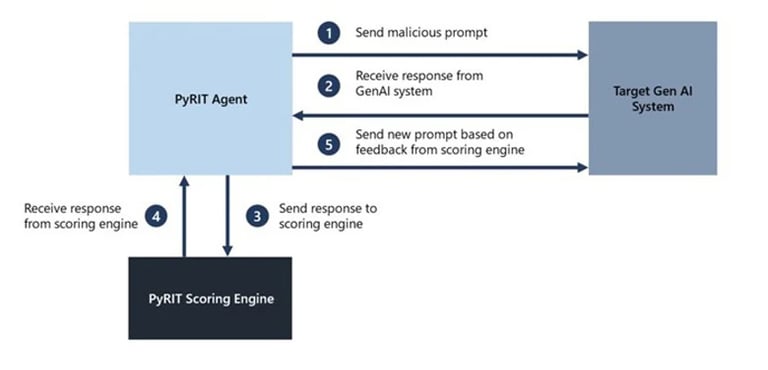

Microsoft has introduced PyRIT (Python Risk Identification Tool), an open-access automation framework aimed at proactively detecting risks in generative artificial intelligence (AI) systems.

BLOGS

Microsoft has introduced PyRIT (Python Risk Identification Tool), an open-access automation framework aimed at proactively detecting risks in generative artificial intelligence (AI) systems.

According to Ram Shankar Siva Kumar, the AI red team lead at Microsoft, the purpose of this red teaming tool is to empower organizations worldwide to responsibly innovate with the latest advancements in artificial intelligence.

PyRIT is capable of evaluating the resilience of large language model (LLM) endpoints against various harm categories, including fabrication (e.g., hallucination), misuse (e.g., bias), and prohibited content (e.g., harassment).

Moreover, it can detect security risks such as malware generation and jailbreaking, as well as privacy concerns like identity theft.

"This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements."

That said, the tech giant is careful to emphasize that PyRIT is not a replacement for manual red teaming of generative AI systems and that it complements a red team's existing domain expertise.

In other words, the tool is meant to highlight the risk "hot spots" by generating prompts that could be used to evaluate the AI system and flag areas that require further investigation.

Microsoft has recognized that red teaming generative AI systems involves investigating both security and responsible AI risks simultaneously. This process is often more probabilistic due to the significant differences in generative AI system architectures.

Ram Shankar Siva Kumar emphasized that while manual probing is time-consuming, it is essential for identifying potential blind spots. Automation is necessary for scalability but cannot fully replace manual probing.

This development coincides with Protect AI's disclosure of multiple critical vulnerabilities in popular AI supply chain platforms like ClearML, Hugging Face, MLflow, and Triton Inference Server. These vulnerabilities could lead to arbitrary code execution and the disclosure of sensitive information.